“The limits of my language mean the limits of my world.”

(L. Wittgenstein)

When Jacques Lacan proposed his psychoanalytical theory based on the influence of language on human beings, many listeners were initially astonished. Is language an actual limitation? In popular culture, it isn’t. It cannot be! But in a world where we keep working with internal representations, it’s much more than a limitation: it’s a golden cage with no way out.

First, an internal representation needs an external environment; under some conditions, it must also be shared by a certain number of people. A jungle, just like any other natural place, is a perfect starting point. However, an internal representation is more than a placeholder. Thinking of it that way leads to dramatic mistakes. The word “tree” is not an actual tree and never will be, but “tree” is an entity that transforms an environmental element into something else. Let’s call it whatever we like; the name doesn’t matter. The only important thing is that this new entity is a building block for an intelligent line of reasoning.

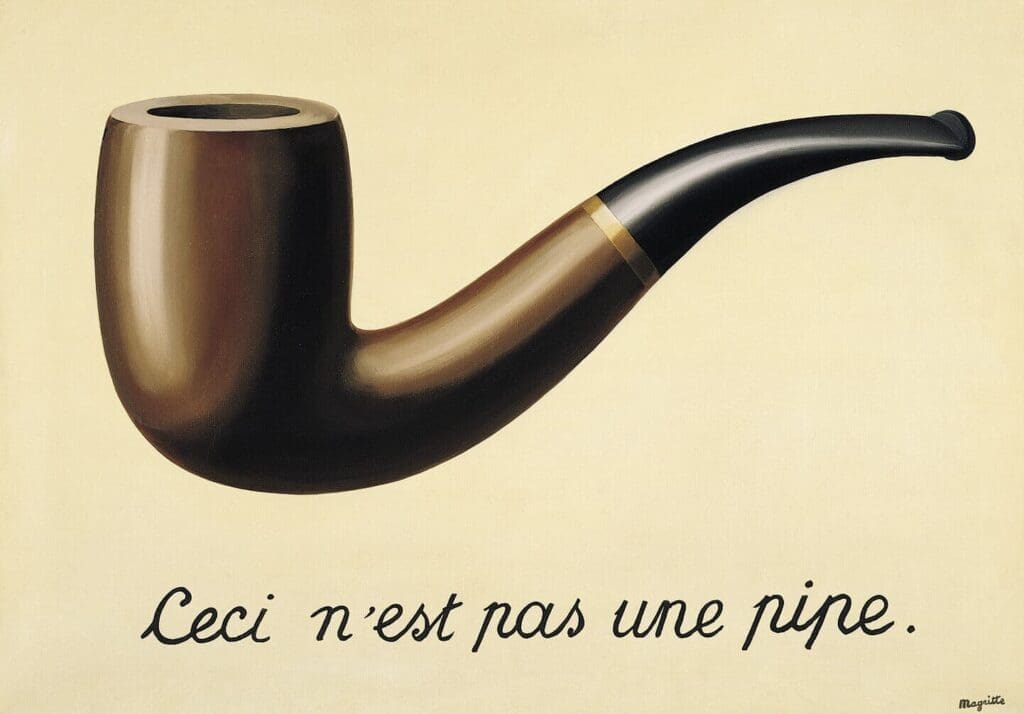

Let’s consider another example: “The Treachery of Images,” painted by René Magritte. Of course, the painting is not a pipe, but from a particular viewpoint, it is! It’s a “pipe” because it can trigger an internal representational chain, one that can start from the color of the wood and end at the face of a tobacconist who sells similar pipes. Similar pipes? Does he sell paintings or posters? No, of course not. That’s the representational link! In our mind, that “pipe” is potentially any pipe (with any shape, color, material, and so forth); therefore, it is much more than a singular object. Language has turned it into an almost almighty abstract representation.

Now, the first question arises: “What about Artificial Intelligence?” We can surely state that all these concepts concern intelligence, and, with minimal effort, it’s possible to find hundreds of papers whose goal is to model abstract representational entities and manipulate them in complex tasks. A chatbot is a clear example of this approach. Of course, I’m not talking about domain-specific bots (which are little more than flexible FAQs), but about all those attempts to create a program to chat with. A program that can answer questions like “How are you?” (maybe a more interesting question would be “What is the Vbe of your 345365th transistor?”) or “Did you enjoy your last trip?” (Where? Does a “where” exist for the chatbot?).

A couple of months ago, a Facebook experiment failed to reproduce a good English verbal interaction (it produced a language where the abstraction of numbers regressed into the repetition of the same placeholder word: “I give one one one things…. You have two cards”). A huge mass of (otherwise intelligent) people started tweeting and posting: “An artificial intelligence created his (maybe ‘its’ would be better) own language,” and thousands of comments appeared like mushrooms after a storm.

Don’t be surprised: an artificial intelligence did create its own language. That’s true. If you train a Word2Vec model, the internal word embedding is an artificial representation that a human being can’t read directly (and can’t even visualize when the number of dimensions is higher than 3). But what about the context? The environment is absent. The reason is simple: these models are trained with human-generated data and learn to map an input to an output (think about a Seq2Seq model). (Un)fortunately, this process needs a background: Magritte needed a real pipe (maybe a different one, but probably an object to smoke from), and the first cave-dwellers needed real trees and real animals before painting hunting scenes on the rocks. A mapping based on external relationships (defined by some mysterious agent) can explain Pavlovian conditioning but cannot justify how language can co-create reality.
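To make the point about the missing environment a bit more concrete, here is a minimal sketch. It is not Word2Vec: I’m swapping in a much simpler technique (plain co-occurrence counts compared with cosine similarity), and the tiny corpus, the function names, and the window size are all hypothetical choices made only for illustration. The idea it shares with real embeddings is that a word is represented purely by the other words around it.

```python
import math
from collections import Counter, defaultdict

# Toy corpus, invented for this illustration (an assumption, not real data).
corpus = [
    "the man smokes a pipe",
    "the man smokes a cigar",
    "the bird sits on a tree",
    "the bird sits on a branch",
]

def cooccurrence_vectors(sentences, window=2):
    """Represent each word by counts of the words within `window` positions."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

vectors = cooccurrence_vectors(corpus)
sim_pipe_cigar = cosine(vectors["pipe"], vectors["cigar"])
sim_pipe_tree = cosine(vectors["pipe"], vectors["tree"])

# "pipe" ends up closer to "cigar" than to "tree", yet nothing here
# has ever seen, held, or smoked a pipe: only neighboring words.
print(sim_pipe_cigar > sim_pipe_tree)
```

In this toy setting, “pipe” is similar to “cigar” only because both follow “smokes a” in sentences written by humans. The structure is real, but it is inherited from our text, not from any environment the program has explored.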

I’m not a pessimist. I do believe in science and technology. But, at the same time, I hate marketing! Even when it’s AI marketing. Do we want to study how artificial intelligence can develop a language? Well, let’s first define the environment. A robot that can explore a park has a much better chance than a program trained on a huge sentence dataset. Again, the point is the goal: do we expect the robot to explore because it needs to? If so, a proto-language can emerge out of necessity (reinforcement signals can be encoded and stored somehow). Does it have a semantic structure? Yes, if we don’t insist on “exporting” our semantics. No, if we keep asking the robot: “Do you like the grass?” (The situation gets worse when somebody hopes to find an AI friend to chat with.)

Moreover, let’s forget about English or Esperanto. A language must emerge with its own rules, its own alphabet, and maybe its own “shades.” A translation (if such robots are interested…) can be a later job. Trying to anticipate it, even if we are a conquering people, can only lead us to “empty clones,” which are even worse than cellular automata (which are, at least, beautiful mathematical expressions).

I always remember and repeat the dialogue between Alan Turing and the policeman in “The Imitation Game.” The officer asked: “Can a machine think?” and he answered: “Yes, it can. But in its own way.”
