Foreword

Such an approach to the problem leads toward a conception of mental activity completely disengaged from the limits of inert matter: man, though made of atoms just like a rock, is “compelled” to possess a kind of vis viva that transforms his objectively material nature into a counterpart definable only in metaphysical terms.

All this has fueled, over the last two decades, a heated debate between those who consider a distinction between mind and brain ontologically necessary and those, like me, who argue that what we insist on calling the mind is nothing more than the result of the objective, physically analyzable activity of the central nervous system.

Thanks to modern brain imaging techniques such as positron emission tomography (PET) and functional magnetic resonance imaging (fMRI), it has been possible to show experimentally that some regions of the brain become active only when the subject performs particular tasks. For example, when one is asked to perform a non-trivial numerical calculation, there is marked activity in the left hemisphere, while during silent reading of a text one can see Wernicke’s area, responsible for semantic decoding, come into action, enabling the individual to understand what he or she is reading.

Of course, it would be absurd to expect an exact localization of every brain function since, as we shall see, the very structure of the brain allows information flows from different sources to merge.

It is precisely because of this peculiarity that every day we can tackle tasks which break down into sub-problems and which would be prohibitive even for the most powerful supercomputer. As Alberto Oliverio points out, reading requires decoding about 40 characters per second with no constraint on their shapes and characteristics; had I decided to write this article in a particularly elaborate font (in the extreme case, think of handwriting), my brain would still have had no particular difficulty in guiding my fingers to the correct keys, and on rereading what I wrote, I would still have understood the meaning of each sentence.

A “static,” that is, strongly localizing, brain would be in extreme difficulty whenever it was presented with alternatives to its pre-stored structures, even alternatives functionally and structurally compatible with them. To understand how this could happen, we must go back in time and question the great philosopher Immanuel Kant, for he was among the first to ask why the mental elaboration of a concept is almost entirely independent of the particular experience that originally led us to it. The first time I read the Critique of Pure Reason, I was deeply struck by the insight Kant showed when he explained, long before modern cognitive psychology existed, why, for example, my idea of “home” was untethered from the building I live in or from what I see looking out my window.

His investigation started from the assumption that the thought of an object is not formed by “cataloging” perceptions but by synthesizing a manifold that arises from a stream of incoming information: the “house,” understood as a concept/object, is broken down into its characteristic parts and, once the idea attached to it has become sufficiently stable, the brain reuses this data to “build” a house from current experience or even from imagination alone.

Of course, Kant lacked the means of investigation that would have allowed him, following Galileo’s teaching, to confirm his hypotheses experimentally, and so he let himself be guided by the intellect alone. Today, however, the picture has become clearer (not wholly, but enough to write a short article about it!), and this feature has been given the name “generalization capacity.” The rest of this essay is devoted to this feature of the mind, so I ask the reader for a little patience while I briefly return to the question that opens the article, “How natural is artificial intelligence?”, to clarify some essential points.

If one were to ask a person whether his or her intelligence is natural, the answer would undoubtedly be yes. But if one were to ask what he or she means by “natural,” one could collect so many explanations as to compile a new Encyclopedia Britannica. Each of us is convinced of being part of nature, meaning that our bodies and minds were formed by processes that can in no way be called artificial. This is true. What interests us, however, is whether there can be any distinction between a human and a hypothetical thinking machine: which parameters need to be considered? More importantly, which tests should both parties undergo?

A first approach might be to ask the machine whether it is natural (an idea that goes back to the famous Turing test), but what value could the answer have? A “yes,” or a “no,” would tell us nothing. Man clearly builds the computer, but it is equally clear that the electrons, protons, and neutrons composing it are the same in its microprocessor as in the brain, heart, and liver of a human being: both are “built” from the same elements, yet while the machine is cold and inexpressive, the person displays characteristics we would call “emotional.”

One might then ask whether it is emotions that make the difference. Yet, with all respect to those who consider them a kind of “divine inspiration,” it is fair to point out that what we call fear, anxiety, or happiness can be translated into a chain of perception, brain processing, and self-conditioning driven by neurotransmitters released by neurons and by certain hormones secreted by the adrenal glands. In other words, an emotion is an internal state that may arise from any cause but unfolds according to a script our organism knows exceptionally well. (If this were not so, the sight of a giant snake might trigger selective mechanisms that “repeat from within” the phrase “Don’t panic!”; unfortunately, the automatic control of reactions, much as in machines, is almost always preemptive and difficult for the organism itself to modify.)

Having discarded the emotion hypothesis as well, what remains are purely intellectual abilities: logic, abstract thinking, and artistic skill. In the early days of artificial intelligence, great pioneers such as Marvin Minsky proposed what for many years would remain the “methodology” for dealing with problems of particular complexity; their idea rested on the assumption that the adjective “artificial” referred not so much to the intelligence of the machine as to the fact that a good programmer could write innovative algorithms capable of coping with computationally heavy situations.

Following this line of thought, the answer to our initial question can only be “Artificial intelligence is as natural as a computer is,” with the difference that, while humans are capable of abstraction but not of fast computation, the computer, suitably programmed by the operator, is in principle capable of both. The main problem, however, is precisely that without the help of a human expert it is practically impossible to achieve the leap in intelligence that could improve the behavior of automated machines.

Fortunately, research has moved beyond the very narrow boundary drawn by the fathers of what is now called “classical” artificial intelligence. The most striking novelty has been not so much the change of strategy itself as the idea that a computer can only become more intelligent if it mimics, operationally and structurally, the animal organs responsible for the various control and processing functions.

The starting point was the results of neurophysiology: researchers set out to implement particular structures (neural networks) that function similarly to their natural counterparts. In this way, which we will not describe for lack of space, the role of the programmer immediately ceased to be central and became increasingly marginal, leaving room for a semi-autonomous internal evolution guided only by the goals one wishes to achieve.

For example, a network of just 20 neurons can be made to learn (learning here meaning the adjustment of some characteristic parameters) to recognize the letters of the alphabet, after which it easily recognizes a distorted character as well.

Many personal computer programs are now based on this approach. Think of OCR (optical character recognition) programs, which convert an image containing text into an electronic document, or of the sophisticated tools police use to compare a suspect’s face with those in their databases; these are only trivial examples, but connectionist artificial intelligence is now so widespread that it has become an essential requirement for any self-respecting programmer of intelligent systems.

By now, the answer to our question is slowly turning toward the affirmative. It is still unclear, however, why a simple change of course has brought about a revolution whose scope only science fiction writers seem able to grasp; we will discuss this at length in the next section.

The ability to generalize and abstract

I believe no one would hesitate to say that, if the anti-dualistic hypothesis of the mind holds, the brain and its activity are the actual cause of intelligence; to claim the opposite would contradict the hypothesis itself. If we apply this causal relation to our problem, the question becomes: what guideline should we follow to reach a concrete result in artificial intelligence? Relying solely on classical theories, the path would follow the progression effect → cause: having found that a good programmer can “teach” a machine to perform complex tasks, the main goal would be to fix the desired effect and then try to achieve it with any cause (that is, any program).

But such a methodology has a side effect: it distances us from nature, making us strive for a result that does not arise from the same causes as in an animal brain. Connectionism, by contrast, follows the inverse relation, cause → effect. It starts from observing the bioelectrical behavior of the neurons that make up the central nervous system of living organisms and proceeds by “reverse engineering,” that is, by trying, through simulation and field experiments, to trace the root causes that generate all the effects of intelligence.

Michelangelo used to say that he saw a statue inside every block of marble and that his only task was to remove the excess! This remark could well serve as the motto of modern artificial intelligence, with the one difference that, while the sculptor could draw on his boundless imagination, the scientist must stick to observations of reality. In any case, believe me, until a century or so ago, in the view of most people there was almost no difference between a block of travertine and the brain!

Only around 1960 did it begin to be realized that the only way to unravel the skein was to try to reproduce basic nerve structures and observe their emergent properties; the arrogant attempt to force nature with increasingly sophisticated algorithms then gave way to a more cautious analysis of factual data followed by careful experimentation. But what are the emergent properties we have mentioned?

To put it metaphorically, the Pietà and the David are emergent properties of a marble block shaped by Michelangelo’s activity; similarly, an artificial neural network “reveals” its secrets not so much at the design stage, that is, when it is decided to implement it as a computer program, but rather during its operation. It is as if a veil were slowly lifted from a painting only when there are observers paying attention to it. Otherwise, the canvas stays concealed, and at most one may know of its existence through the effect its presence produces within a museum, without being able to study it, let alone replicate it.

Now, I would not want the image of the neuro-engineering researcher to be associated with that of the art forger, not so much because I despise the latter’s activity, but because the goal of artificial intelligence is not to produce “clones” but rather to build machines that can express their potential in the same way as most higher-order living beings. In other words, this discipline must take its inspiration from nature, but it cannot expect to express its results according to the same canons, since doing so would bring no advantage whatsoever.

At this point, however, what has been said so far might seem to answer the initial question in the negative: it does not. That mistake could arise only if one took the subject of the question (artificial intelligence) to be linked to the nominal predicate (is natural) by a strict, binding relation; in that reading, logic would immediately point to an obvious contradiction, and it would be right to eliminate it.

But if we analyze the properties of artificial systems and those of the corresponding natural ones, everything changes because the relationship becomes a “simple” analogy. Let me explain: The connectionist approach is natural not because it uses living cells like biology but because it artificially re-implements organic structures (trying to be as faithful to the original as possible) and sticks to their functioning without forcing their dynamics.

Indeed, there would be no other way to observe emergent properties, which within the classical framework would be preconditioned and precoded. A significant example is the so-called self-organizing maps (SOMs), particular neural networks that can store information almost autonomously; to do the same with classical strategies, one would have to write a program that places every single piece of data in a particular location, and the whole process would be practically known a priori.

With SOMs, by contrast, the user or programmer does not know which memory locations (in a broad sense) will be used, since the network itself chooses them according to an associative principle. For example, two faces with very similar physiognomy will be stored in relatively close places; consequently, retrieval does not proceed as if through a card index, but by presenting the network with the characteristic features to be searched for and letting it respond most strongly to the stored data that show the most pronounced similarity.
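To make the associative placement more concrete, here is a minimal self-organizing map in Python. It is a sketch under simplifying assumptions, not the author’s implementation: the “faces” are hypothetical two-feature vectors, the map is a one-dimensional chain of 10 units, and the function names (`bmu`, `train_som`) and parameter values (`n_units`, `epochs`, `lr0`, `seed`) are illustrative choices. Nowhere does the code decide where an item goes; each sample simply pulls its best-matching unit, and that unit’s neighbours along the chain, toward itself, and retrieval consists of presenting a probe and seeing which unit responds most strongly.

```python
import math
import random


def bmu(weights, x):
    """Index of the best-matching unit: the unit whose weight vector is closest to x."""
    return min(range(len(weights)),
               key=lambda i: sum((w - v) ** 2 for w, v in zip(weights[i], x)))


def train_som(data, n_units=10, epochs=50, lr0=0.5, seed=0):
    """Train a one-dimensional chain of units on feature vectors in [0, 1]^d.

    No item is assigned a slot in advance: each sample pulls its best-matching
    unit, and that unit's neighbours along the chain, towards itself.
    """
    rng = random.Random(seed)
    dim = len(data[0])
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_units)]
    radius0 = n_units / 2
    total = epochs * len(data)
    step = 0
    for _ in range(epochs):
        order = list(data)
        rng.shuffle(order)
        for x in order:
            frac = step / total
            lr = lr0 * (1 - frac)                    # learning rate decays over time
            radius = max(radius0 * (1 - frac), 0.5)  # neighbourhood shrinks over time
            c = bmu(weights, x)
            for i, w in enumerate(weights):
                h = math.exp(-((i - c) ** 2) / (2 * radius ** 2))  # Gaussian neighbourhood
                for k in range(dim):
                    w[k] += lr * h * (x[k] - w[k])
            step += 1
    return weights


# Hypothetical "faces", each reduced to two made-up features in [0, 1].
faces = [[0.10, 0.12], [0.12, 0.10], [0.11, 0.11],
         [0.50, 0.52], [0.52, 0.50],
         [0.90, 0.88], [0.88, 0.90]]
som = train_som(faces)
# Retrieval by similarity: which unit responds most to each probe?
print(bmu(som, [0.11, 0.12]), bmu(som, [0.89, 0.89]))
```

With well-separated groups like these, probes from different groups end up on different units, while near-identical probes tend to share one; the exact unit indices depend on the random initialization, which is precisely the point: the programmer does not choose them.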

This example should clarify the difference between an “unnatural” and a “natural” approach. I hope the reader is convinced that the territory of neuroengineering is far from flat and well marked, but that it does have reference points: our mind and its properties can slowly guide science both toward a psychological understanding of humans and toward the design capabilities needed to build machines ever closer to the behavioral modus operandi of living beings.

But let us return to the generalization capacity mentioned in the foreword: the SOM example should already have made it partly clear that a static structure can return at most as much information as was stored in it in advance, whereas a neural network trained on a limited data set can produce a theoretically unlimited number of outputs (i.e., results).

Consider an alphabet of 21 symbols written in the Times New Roman font, and associate each letter image with an output symbol (e.g., a number). Once learning is complete, take any letter in a different font, such as Arial or Helvetica, or randomly deform one of the originals, and submit it as input: unless the image is entirely unrecognizable (which amounts to a total loss of information), the output will be the number corresponding to the correct letter. The network has generalized the original sample by “generating” a cloud of possible variants around it that allows similar shapes to be recognized; note that the word “similar” has no definite mathematical meaning here!
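The training-then-generalization experiment can be sketched with a single layer of perceptron neurons, one per letter. This is an illustrative assumption rather than the network the author had in mind: three hand-drawn 5x5 bitmaps stand in for the 21 Times New Roman letters, and the helper names (`to_vector`, `train`, `classify`) are hypothetical. Training stops once every stored letter is recognized; the bent T at the end was never seen during training, and whether it is recognized depends on the weights the network has learned.

```python
# Hypothetical 5x5 bitmaps ('#' = ink) standing in for a real font.
LETTERS = {
    "T": ["#####", "..#..", "..#..", "..#..", "..#.."],
    "F": ["#####", "#....", "####.", "#....", "#...."],
    "L": ["#....", "#....", "#....", "#....", "#####"],
}


def to_vector(rows):
    """Flatten a bitmap into 0/1 inputs, plus a constant 1 acting as a bias input."""
    return [1 if ch == "#" else 0 for line in rows for ch in line] + [1]


def net_input(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train(patterns, max_epochs=100_000):
    """Perceptron rule, one output neuron per letter (one-vs-rest)."""
    xs = {label: to_vector(rows) for label, rows in patterns.items()}
    size = len(next(iter(xs.values())))
    weights = {label: [0] * size for label in patterns}
    for _ in range(max_epochs):
        mistakes = 0
        for target, x in xs.items():
            for label in weights:
                desired = 1 if label == target else 0
                fired = 1 if net_input(weights[label], x) > 0 else 0
                if fired != desired:
                    delta = desired - fired  # +1 or -1: learning changes the weights
                    weights[label] = [wi + delta * xi for wi, xi in zip(weights[label], x)]
                    mistakes += 1
        if mistakes == 0:
            return weights
    raise RuntimeError("training did not converge")


def classify(weights, rows):
    """Winner-take-all: the neuron with the largest net input names the letter."""
    x = to_vector(rows)
    return max(weights, key=lambda label: net_input(weights[label], x))


weights = train(LETTERS)
bent_t = ["#####", "..#..", "..#..", "..#..", "...#."]  # deformed T, never seen in training
print(classify(weights, bent_t))
```

The perceptron rule is used here because it is guaranteed to converge when each pattern can be separated linearly from the others, which holds for any set of distinct binary images; a real OCR network would use many more letters, hidden layers, and far larger images.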

A classical algorithm can compare the input image with the stored ones and evaluate their correlation, but this yields a set of scalar values representing the degree of similarity, and there is no guarantee that two completely different images will produce different correlations. It can indeed happen that, for example, a T and an F, when compared with an R, give values that are, if not exactly identical, very close, and therefore easily upset by the finite precision of computers.
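The weakness of a single similarity score can be shown with a few lines of Python. The bitmaps below are hypothetical 5x5 drawings, and cosine similarity is only one possible choice of “correlation.” Rather than reproducing the T/F/R case, the sketch uses the letters b and d, which are mirror images of each other, compared against a left-right symmetric stored o: by symmetry both overlap the o in the same number of pixels, so this classical score cannot tell them apart at all.

```python
import math


def parse(rows):
    """Turn a bitmap given as strings ('#' = ink) into a set of (row, col) pixels."""
    return {(r, c) for r, line in enumerate(rows) for c, ch in enumerate(line) if ch == "#"}


def similarity(a, b):
    """Normalized correlation (cosine similarity) of two binary images."""
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))


O = parse([".###.", "#...#", "#...#", "#...#", ".###."])  # stored letter, symmetric
b = parse(["#....", "#....", "####.", "#...#", "####."])
d = parse(["....#", "....#", ".####", "#...#", ".####"])  # mirror image of b

print(similarity(b, O), similarity(d, O))  # both equal 7/12
```

Each of b and d has 12 ink pixels, 7 of which coincide with the o, so both scores are exactly 7/12 even though the two images are different letters.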

It is therefore clear that the basic principle of a neural network makes it possible to get around this obstacle: provided the design is well structured, a very small number of operations can achieve a much better result than any other available solution.

At this point, it is worth remembering that the goal of the research is to approach nature, not to surpass it. Even a perfectly trained network can make mistakes, but this should not come as much of a surprise, since generalization is limited even in humans; moreover, many surprising results have been achieved with a few dozen interconnected neurons, whereas a human brain has roughly 86 billion neurons, each of which can have as many as 20,000 connections (the Purkinje cells of the cerebellum can have as many as 200,000!).

So far, artificial neural networks of this size have never been implemented, because the memory and computing power required are practically prohibitive; soon, however, thanks to the steadily falling price/performance ratio of hardware, it will be possible to run increasingly complex experiments. Researchers such as Igor Aleksander of Imperial College London have already built highly versatile intelligent devices that generalize and abstract in a rather promising way, always using mid-to-low-cost workstations.

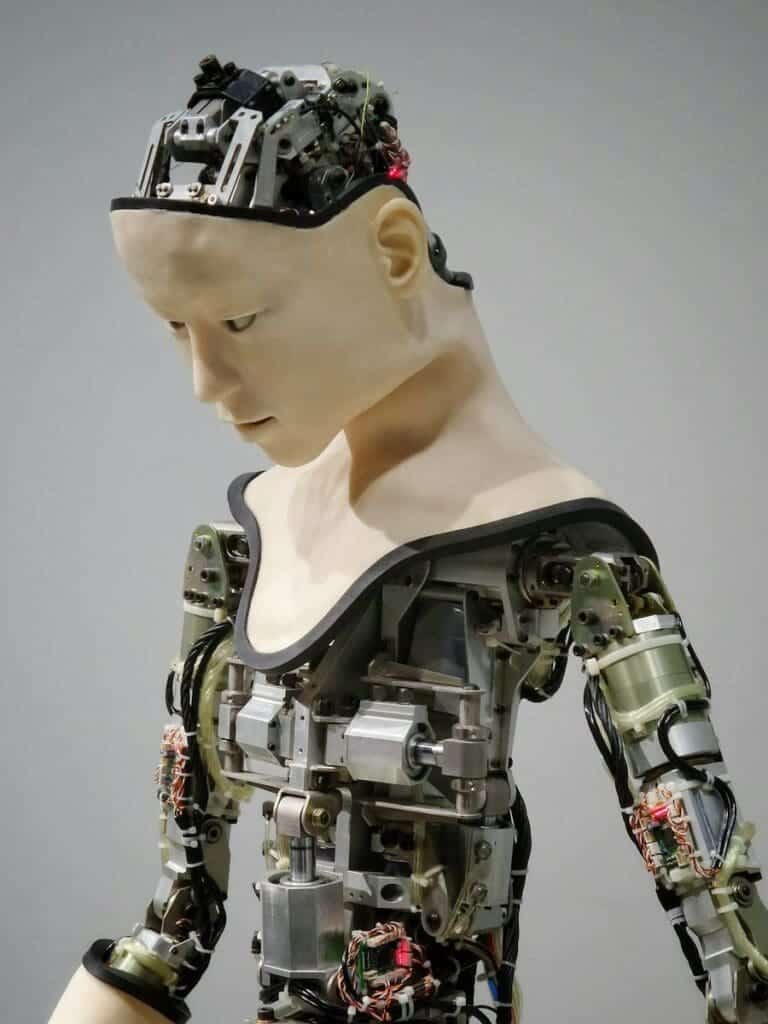

But what else, besides pure generalization, can be achieved by training a neural network? The field that lends itself to the most fascinating experiments is undoubtedly robotics: anthropomorphic robots, for example, can move within a complex, unstructured environment and, thanks to their ability to possess internal states (think of the sensation you experience when eating an apple: it is the internal state caused by the taste input associated with the apple), they can represent the scenario in which they move and the elements with which they interact.

In practice, such a machine can behave like a primitive human cautiously exploring his natural macrocosm and learning to identify the objects in it; slowly, associations form in the brain of this primordial human/robot through the connections between different neurons, which, as happens in children, later undergo a process of “pruning” aimed at eliminating redundancies and specializing individual areas.

When we discussed SOMs, we saw that they organize themselves to store information; clearly, after incoming data has been flowing in for a certain time, the network begins to saturate, that is, it can no longer specialize its areas enough to allow correct data retrieval.

This process also happens in humans, and the only way to prevent it is to filter perceptual information through a series of stages whose task is to select only the primary content and eliminate anything unnecessary.

An artificial system can work in much the same way: it only needs to manage a short-term memory (think of a computer’s RAM) that receives the sensory streams, and a long-term memory, or LTM (larger than the former but still limited), where the fundamental information is stored.

The transfer from the former to the latter is governed by a process that becomes more and more selective as the robot analyzes and learns about its environment and the agents in it. At first, almost all information must pass into the LTM, ensuring a solid starting point for learning; after a certain period, which in humans ends in early childhood, this transfer must shrink considerably, both to avoid overcrowding the LTM and, above all, to leave room for the ability to generalize.
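A two-stage memory of this kind can be sketched as follows. Everything here is a hypothetical illustration, not a description of a specific robot architecture: stimuli are plain numbers, the short-term memory is a fixed-size `deque`, and an item is copied into the bounded long-term memory (LTM) only if its novelty, measured as the distance from the nearest stored item, exceeds a threshold that grows with the number of stimuli processed, up to a cap. The class name `TwoStageMemory` and its parameters are invented for this example.

```python
from collections import deque


class TwoStageMemory:
    """Hypothetical short-term buffer feeding a bounded long-term memory (LTM)."""

    def __init__(self, stm_size=5, ltm_capacity=20, growth=0.01, max_threshold=0.3):
        self.stm = deque(maxlen=stm_size)  # RAM-like buffer: the oldest items fall out
        self.ltm = []                      # long-term store, never larger than ltm_capacity
        self.ltm_capacity = ltm_capacity
        self.growth = growth
        self.max_threshold = max_threshold
        self.seen = 0

    def threshold(self):
        """Novelty required for consolidation; rises with experience, up to a cap."""
        return min(self.growth * self.seen, self.max_threshold)

    def novelty(self, item):
        """Distance from the closest item already in long-term memory."""
        if not self.ltm:
            return float("inf")
        return min(abs(item - old) for old in self.ltm)

    def perceive(self, item):
        self.stm.append(item)
        self.seen += 1
        if len(self.ltm) < self.ltm_capacity and self.novelty(item) > self.threshold():
            self.ltm.append(item)


memory = TwoStageMemory()
memory.perceive(0.0)
memory.perceive(0.05)     # "early childhood": a small difference is enough
for _ in range(100):
    memory.perceive(0.0)  # repeated, familiar input
memory.perceive(0.9)      # clearly new: consolidated
memory.perceive(0.2)      # too close to what is known: stays short-term only
print(memory.ltm)         # [0.0, 0.05, 0.9]
```

The linear, capped threshold is an arbitrary choice; any schedule that makes consolidation stricter over time would fit the description above.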

The ability to generalize depends heavily on the “degrees of freedom” a network possesses: if too many neurons, or too many synaptic connections between them, are used, the network risks learning by rote and grasping similarities poorly, whereas continuous adaptive pruning may keep it able both to remember and to abstract.
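One common way to reduce a network’s degrees of freedom is magnitude pruning, which removes the weakest synaptic connections. The sketch below assumes that technique (the paragraph does not name one) and represents a layer as a hypothetical list of weight rows, one row per neuron; the functions `prune_smallest` and `active_connections` are invented for illustration. It performs a single pruning step; a continuous, adaptive scheme would alternate short training phases with small pruning steps like this one, and the training part is omitted here.

```python
def prune_smallest(weights, fraction):
    """Return a copy of `weights` with the weakest `fraction` of connections set to zero.

    weights: list of rows, one per neuron, each a list of synaptic weights.
    """
    positions = [(i, j) for i, row in enumerate(weights) for j in range(len(row))]
    # Weakest connections first; the sort is stable, so ties keep their original order.
    positions.sort(key=lambda p: abs(weights[p[0]][p[1]]))
    pruned = [row[:] for row in weights]
    for i, j in positions[: int(len(positions) * fraction)]:
        pruned[i][j] = 0.0
    return pruned


def active_connections(weights):
    """Number of connections that are still non-zero."""
    return sum(1 for row in weights for w in row if w != 0)


layer = [[0.9, -0.05, 0.3],
         [0.01, -0.7, 0.2]]
smaller = prune_smallest(layer, 0.5)
print(smaller)                      # [[0.9, 0.0, 0.3], [0.0, -0.7, 0.0]]
print(active_connections(smaller))  # 3
```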

Conclusions

How natural is artificial intelligence? Very, a little, or not at all… It all depends on the strategy adopted and, unfortunately, also on the preconceptions that have often clouded the vision of scientists. A good program can cope optimally with a variety of problems, but it is closed and limited: nothing can be expected of it beyond the objectives considered at the design stage.

A connectionist approach, combined with the results of the cognitive sciences, can cross the boundary of the initial idea and “overflow” into entirely unexplored territory, just as children, starting out as completely immature beings, slowly grow into increasingly formed persons, both physically (as dictated by the very characteristics of the environment in which they live) and psychologically.

Maturity represents the culmination of adaptive brain processes. It can be easily characterized by all the achievements of the individual mind: motor coordination, language, the ability to read and write, logical-mathematical skills, etc.

To follow the same developmental path, an artificial system must possess the fundamental basic structures. At the same time, it is essential for the agent, or robot, to interact with a varied environment that provides it with as many stimuli as possible. In other words, research in this area must necessarily be highly speculative: if one aims at intelligent software with a sparse set of possible actions, the result can only stay within today’s standards or improve slightly; if instead one invests in open-ended applications not tied to a fixed context (such as anthropomorphic robots or virtual agents with semantic and syntactic capabilities), one is much more likely to reach, in a short time, a long series of achievements that may ultimately culminate in machines endowed with natural intelligence.

To conclude this article, I would like to recall that Galileo Galilei himself, the father of modern science, was the first to point out that whoever wishes to study nature must first respect it: theories are always welcome, but the scientist must still rely on experiment, both to validate them and to find new insights. Artificial intelligence is science only when it starts from the fundamental reality (the intelligence of living beings), studies it, and, only after understanding at least some aspect of it, attempts to model it with the means provided by modern technology. After all, what would be the point of talking about something that is artificial yet meant to reflect a natural reality, without drawing on the very sources available to man?

Software engineering can help, but, in my opinion, the only natural way forward is the one mapped out by neuroscience and cognitive psychology, which, together with the results of mathematics and physics, can truly open the door to a new and fascinating millennium of achievement!

Bibliographical references (in Italian)

- Oliverio A., “Prima Lezione di Neuroscienze”, Editori Laterza

- Floreano D., Mattiussi C., “Manuale sulle Reti Neurali”, Edizioni il Mulino

- Parisi D., “Mente: i nuovi modelli di vita artificiale”, Edizioni il Mulino

- Legrenzi P., “Prima Lezione di Scienze Cognitive”, Editori Laterza

- Aleksander I., “Come si costruisce una mente”, Einaudi

- Neisser U., “Conoscenza e realtà”, Edizioni il Mulino

- Penrose R., “La mente nuova dell’imperatore”, Edizioni SuperBur

The article was originally published in “La Rivista del Galilei” by Edizioni Bruno Mondadori.

The essay is also featured in the volume “Saggi sull’Intelligenza Artificiale e la Filosofia della Mente.”
