The pros and cons of the Turing Test

A behavioral test, then, whose purpose was not to identify whether and where there might be intelligence, but rather to assess the degree to which the artificial system was able to give fitting and disarming responses. Of course, when talking about the machine in this context, it is important to point out that no mention is ever made of the hardware requirements needed to achieve a particular result. Turing himself based his statements more on foresight than on established knowledge, and in [1], p. 64, he writes: “…I believe that in about fifty years it will be possible to program computers having memory capacities of about 10^9 so that they will play the imitation game so well that an average questioner will have a probability of no more than 70 percent of making the correct identification after five minutes of questioning.”

The reason for this reasonably high demand for memory (on the order of 10^9 binary digits, a little over a hundred megabytes) lies precisely in the computational approach that Turing wished to follow: what he was interested in was not the external structure and any raw functionality but rather the program, i.e., what we, countering John Searle’s claims, call the intentionality of the machine. A device capable of passing the Turing test (thus fooling even the most astute questioner) is nothing more than a program, more or less varied, that must be able to make appropriate connections between questions and answers, but beware!

I did not say that it must be able to implement only and exclusively a thoughtful associative process but that its relationship with the interlocutor must necessarily be based on a dialogue. As we shall see later, this approach is itself a clear disadvantage for the machine and is the cause of the heated debate that will culminate in the Chinese room thought experiment proposed by Searle. In formulating his imitation game, I believe that Turing did not intend to exaggerate the concept of a program to the point of prompting many researchers to split into two distinct factions (the strong AI faction and its opposite). It is well understood that much of the research contemporaneous with the release of his paper “Computing Machinery and Intelligence” was still in its embryonic stage.

The finite-state machine and the digital calculator represented for many an achievement of extraordinary human inventiveness, and there were quite a few filmmakers who were ahead of their time and animated large piles of scrap metal to the point of making them appear humanoid; however, today the situation has changed dramatically, and much enthusiasm has given way to a more cautious analysis of the facts.

And that is precisely where I intend to begin my discussion. The Turing test is, as we have seen, behaviorist, but it is also undoubtedly very subjective, since the questioner is the ultimate judge of whether he is faced with a man or a machine; that is, he will have to compare the behavior (in terms of responses) of the interlocutor with that of a hypothetical person of average culture and ability. But how can one always be certain that one dialogue cannot be human while another one is? Also included in the test is the possibility of bluffing, which, if wisely used, can jettison any glimmer of determinism in the decision; for example, if you were to read this dialogue:

- A) What is your name?

- B) xT334GhhdrN&353

- A) Are you a machine?

- B) 2rer%6gghd

- A) What do you think about genetic engineering?

- B) R&fffdwe55333

….

Can you tell who the man is and who the machine is? At first glance, everyone would answer that B is not only not human but also programmed very poorly! But are you sure?

A may be a program that asks questions, and B is a prankster who enjoys confusing ideas… Bluffing is capable of subverting many certainties, and for this reason, one must be highly cautious when making “blind” assessments.

Everything would be different if the two interlocutors were arranged opposite each other and there were no telecommunication systems to drive the machine remotely. In this case, almost any doubt would be dispelled. I said “almost” because nothing prohibits the machine from fooling around! When asked what its source of energy is, it might very well answer “fats and sugars,” or, in an extreme case, it might bring up on the screen a “System Error. Buffer Overflow,” at which any questioner with little patience would be entitled to stand up and laugh in the face of the engineers… The Turing test, in its simple genius, also provides for this!

However, direct contact with the machine, whatever it may be, is always a source of unpleasant prejudices, which Alan Turing himself points out: ” …In the course of one’s life, a man sees thousands of machines and, from what he sees of them, draws a large number of general conclusions: they are ugly, they are each designed for a definite purpose, and when one wants to use them for even a slightly different purpose they become useless; the variety of behavior of each of them is limited, etc., etc. …”

The psychological induction that leads us to extend the characteristics of one specimen to the whole species has always been robust. Still, in this area, the entrenchment of pseudo-dualistic ideas has often gotten the better of those who cannot reach a firm position. Many biologists and philosophers have rightly asked: Is it true that passing the Turing test confers intelligence on the machine? For those who would like to explore this aspect critically, I recommend reading the chapter “The Turing Test: A Conversation at the Coffee Shop” by D. Hofstadter on p. 76 of [1], but for now, let us observe, as we have done before, that the Imitation Game puts the machine at a distinct disadvantage: it is forced to answer a series of questions posed in an abstract language with no semantic correspondence; besides, as Daniel Dennett writes, “…the assumption Turing was prepared to make was that nothing could ever pass the Turing test by winning the Imitation Game without also being capable of performing an indefinite number of other manifestly intelligent actions…”.

Think of asking a computer what it thinks about apples. At best, the only acceptable answer might be:

“An apple is a fruit enjoyed by human beings and therefore also by me!” The idea of an apple possessed by the questioner is undoubtedly different from the mental state (if any) of the machine, and thus many semantic inferences would remain vain attempts to reconcile meaning with syntax and, at best, one more opportunity to convince the onlooker that it (the machine) is, in fact, a human.

But the machine is not a man! While it is always possible to accept the opposite, however, one should not confuse a particular concept (man) with a much broader category; a good computer is a machine, and no law of physics prevents it from behaving intelligently, developing autonomous thought, and interacting with the world (of which it is rightly a part). Still, at the same time, there is no reason to suppose that its intelligence must necessarily conform behaviorally (and especially structurally) with ours. The machine might reject the dialogue if the language is not correct, and attempting to extract information from it would be useless without first accepting some existential autonomy.

We will have a chance to discuss the internal states of an artificial system later. Still, right now, I would like to point out that, in my opinion, it is too easy to fall victim to beautiful illusions when confronted with a large computer churning out pre-imagined answers…

There is nothing intelligent about this, not even if the inferences that select the alternatives take place most logically and rigorously. The simple reason is that the machine “knows” that it must answer B to question A only because a program prescribes so. Still, it has no idea of the program or the subterfuges to be adopted should it wish to circumvent the inexorable algorithmic dictates.

Such a machine is doomed to be an excellent example of data storage and management software, nothing more. I believe, therefore, that Turing’s initial question, “Can a machine think?”, found no real example in those hypothetical systems capable of sustaining the imitation game: a computer would not have to imitate anything. At most, it could behave like an excellent bilingual interpreter, speaking to an auditorium in Italian but still thinking in his native language. Indeed, mental images are the inherent hallmarks of thought, and the drastic decision to suppress them altogether by creating a purely associative system is tantamount to destroying any chance that artificial intelligence can make progress.

Internal states provide a representation of the machine’s status quo at a specific point in space-time, and the answer to a question is necessarily influenced by it; as is the case in humans, the artificial system may be distracted, listless, focused on quite other matters, and all because its internal activity is almost entirely independent of the forced stimulations that a tedious questioner may continue to make. However, as Dennett himself points out in [1], “…another problem raised but not solved in Hofstadter’s dialogue concerns representation. When you simulate something on a computer, you usually get a detailed, ‘automated’ and multidimensional representation of that thing, but of course, there is an abysmal difference between representation and reality, isn’t there?…” Of course!

There is and should be a difference, especially when dealing with intelligent machines. Suppose you write a good program for simulating volcanic eruptions. In that case, you do so to evaluate aspects of reality that, if they were to occur, would lead to catastrophic consequences, but our case is very different.

We do not want to simulate anything, neither what is commonly called the “human mind” nor even the kind of intelligence that schoolchildren every day strive to develop. I want to reiterate that a machine may participate in the imitation game for the sake of Turing’s soul, but that does not mean that it is destined to have to live in the shadow of who knows what supreme entity of which it is only a pale reflection.

Weather forecasting always deals with multivariable systems governed by the Navier-Stokes equations but, as Hofstadter rightly pointed out, it has never happened that a cloud cluster has triggered a thunderstorm inside a laboratory… Those are simulations, that is, scientifically calibrated imitations; their ultimate purpose is to reproduce the laws of physics in particular situations. An intelligent machine, on the contrary, does not simulate anything, since if one of its internal variables takes the value 5, it has the value 5, and that number does not exist outside that specific reality, since it is in its own right a state of mind; if, on the other hand, I run flight simulation software, the altitude I read on the screen, although also a variable, is existentially meaningless. Only through my interpretation do I understand that that signal warns me of something, and without proper awareness, it remains a mere number printed on a screen.

For this reason, it is most important to emphasize that there are no intelligence simulators! Intelligence is an autonomous property that, when you try to replicate it, vanishes like a bubble propelled by a breath…

It indeed emerges from a functional architecture, but there is no rational method (solipsism) to be sure that a certain organism thinks and reasons as I am doing now. The only escape route arises from a shrewd application of the principle of induction, which, in this case, states that an individual capable of sustaining a dialogue for a certain amount of time cannot be a fool!

On this basis, the Turing test was born and developed, a test that, as we have been able to ascertain, must necessarily “unveil” the existence, if any, of internal mental states through the discovery of behaviors that, for practical reasons alone, approximate those of a human being. However, I do not wish that the term “mental state,” in the view of a ridiculous “psyche simulation,” be barbarously translated into emotion or feeling…

We will address this issue extensively in the next section, but it is good to clarify it for now. Although researchers such as Goleman have repeatedly stressed the need to consider an intelligence called precisely emotional intelligence, this does not mean that the equation thought = emotion makes any logical sense. Emotions belong to the mental interpretation of internal states, and there they must remain. Bringing them up every time a scientist talks about AI is just a way of trying to boycott work that is based on factual realities.

What is the meaning of phrases like “That machine cannot love…”? The machine is not supposed to love, at least in the sense that we humans ascribe to the term, but this does not invalidate any intelligence it might have (perhaps it will have a particular internal state that amounts to a kind of love!); comparing two physically and functionally different realities is dangerous and misleading, and the only result we get, if anything, is more confusion and the establishment of the prejudice that machines, as Turing said, are ugly, stupid and inflexible.

A machine that feels emotions

On my desk are stacked dozens of articles concerning AI, psychology, and cognitive science. You wouldn’t believe it, but in each of them, finding a brief reference to emotions is so certain that I could bet my entire library (the dearest thing I own) on it… In our case, the discussion can be summarized and schematized: can machines feel emotions?

Let us start at the beginning and, like good logicians, try to understand what these hated emotions are: suppose a person enters his apartment and, after a few steps, notices that a shadow is moving behind a large piece of furniture. What happens at the physiological level in that individual? The answer is simple: what we have all christened as fear appears. But what is fear? Neuroscientist Joseph LeDoux studies this very important process every day. Still, I am sure that if you asked him for a verbal (and simple) definition of terror, he would freeze up as anyone would.

After all, what we call emotion is just a particular state of mind that is superimposed on the current configuration by altering some of its characteristics to prepare the organism to implement a very specific procedure. For example, if one hears the word “Beware,” there are a variety of organic reactions, and some of them are associated with strong emotions. One places an exclamation mark after the term, and linguistically, the meaning changes. Suppose the person who utters it is someone who shouts at us for no apparent reason. In that case, this triggers a long series of processes (secretion of adrenaline, blocking of digestion, release of stored glucose, etc.) to cope with what is, in all probability, an imminent danger. This does not mean internal emotion arises and develops only for scientifically unknown reasons. The reasons are there!

Unfortunately, one is commonly inclined to attribute to the emotional sphere a valence that is the aftermath of a dualism now almost entirely extinct. When a researcher discovers a new process related to the genesis of an inner feeling, one has often witnessed a kind of ostracism, as if against a heretic of the spiritual world. Just think of what it means to talk openly about machines and emotions. But since I fear no excommunication, I will do so anyway, trying to show how this apparent obstacle to artificial intelligence is nothing but a trivial way of looking at the usual.

We said that a thinking machine must possess internal states. It must maintain a certain amount of energy as information even when the sources have completely died out; look at your closet for ten seconds, close your eyes, and what comes to mind? The closet, of course! Here is a simple example of an internal state: the image has left a trace in your brain processes, and even after it has disappeared, you remain mentally capable of operating with it and even perceptually exploring it figuratively. Now let’s make the same argument with emotion, the first meeting for example; it doesn’t matter how much time has passed since this event, anyone always has vivid images (in a broad sense) of his or her fears, sweat on the brow, joys, uncertainties, etc. Notice that almost all the terms in this list are emotions or particular feelings ascribable to them. Suppose you ask an interviewee, ” Do you remember the fear ? “. What kind of response do you expect?

That poor fellow will try hard to obtain additional information that will enable him to “locate” this fear to which you refer; on the other hand, if you specify right away that you intend to gather information about his first meeting, the subject will have no doubts in responding to you and will perhaps even manage to provide you with a detailed description of the causes that led him to be fearful (she was lovely, he had an ugly car, he was clumsy, he could not speak, etc.).

What can be deduced from this? Emotions have no independent life; they exist in a given context and draw from it every existential detail. The fear does not arise because of x and y taken in isolation, but within the context generated by the generic events x and y.

As we mentioned earlier, the emotion is superimposed on a pre-existing mental configuration, precisely like a red veil being spread over a white sofa: the resulting color arises from the sum of the red and the white and will be, in this case, a shade of pink, but if the sofa had been midnight blue the result would no longer be a tender color with pale hues, but rather a dark and optically powerful purple. Emotion filters reality and certainly influences its evolution within the limits of the possibilities offered by human-environment interaction.

But can the same happen to machines? I do not believe any caution is necessary in answering: the answer is undoubtedly affirmative! The most mind-boggling thing is that a simple program is all that is needed to enable experimentation with what has been stated: suppose you want to regulate the temperature of a room using a sophisticated gadget that is very sensitive to colors. For example, if its color sensors detect a high presence of spectral components near red, the temperature will stabilize slightly higher than required. In contrast, if the hues are green, it will decide to reduce the temperature.

Now, take a room devoid of furniture, assume this implies neutrality for the controller, and set the thermostat to 20 °C. After a more or less long transient, the room will be air-conditioned to the desired value. The machine is, therefore, performing its task normally, without any emotional influence, but if you suddenly decided to introduce a large table with greenish hues into the room, what would happen? The system would lower the temperature by 2 °C; its control program has remained unchanged, yet it would almost seem that a malfunction had occurred… If you are not too shocked, I will go as far as a more daring explanation. Otherwise, I suggest you skip the whole paragraph altogether!

What happened can be summarized as follows: at first, the internal state of the system corresponds to an ambient temperature of 20 °C, and this can be likened (metaphorically) to an individual walking freely down the street with 60 heartbeats per minute. At a certain point, a peculiar and unexpected event appears on the scene: the green table for the machine, a breathtaking woman for the man. What happens? In the person, the pulse will rise rapidly because of the increased availability of adrenaline. He will have the impression (not a true impression, but rather the result of information provided by the internal sense) that he is experiencing a strong emotion; in the machine, on the other hand, that unexpected event will result in a lowering of the temperature set-point, and it too “can” boast of an abnormal sensation in that it will find itself with an internal state (the temperature of the room, for our purposes) different from the one pre-stored. It is as if the reference were 20 °C (or 60 beats per minute), but for special reasons, the change in this value induces awareness of a significant event.
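The color-sensitive regulator can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name, the proportional update, and the `gain` value are hypothetical, and the only figure taken from the worked example is that a green object lowers the set-point by 2 °C from the pre-stored 20 °C.

```python
from dataclasses import dataclass

# Offsets (in °C) that a dominant hue adds to the pre-stored set-point.
# The -2.0 for green follows the worked example in the text; further hues
# could be added, but their values would be pure assumptions.
COLOR_OFFSETS = {"neutral": 0.0, "green": -2.0}


@dataclass
class ColorSensitiveThermostat:
    """Hypothetical regulator whose set-point is 'filtered' by what it sees."""

    base_setpoint: float = 20.0      # value pre-stored by the user
    room_temp: float = 20.0          # current internal state
    dominant_color: str = "neutral"  # what the color sensors report

    def perceive(self, color: str) -> None:
        """Register the dominant hue currently detected in the room."""
        if color not in COLOR_OFFSETS:
            raise ValueError(f"unknown color: {color}")
        self.dominant_color = color

    def effective_setpoint(self) -> float:
        """Set-point actually pursued: the stored value plus the color bias."""
        return self.base_setpoint + COLOR_OFFSETS[self.dominant_color]

    def deviation(self) -> float:
        """How far the pursued set-point has drifted from the stored one."""
        return self.effective_setpoint() - self.base_setpoint

    def step(self, gain: float = 0.5) -> float:
        """One control step: move the room a fraction of the way to the target."""
        self.room_temp += gain * (self.effective_setpoint() - self.room_temp)
        return self.room_temp


thermostat = ColorSensitiveThermostat()
thermostat.perceive("green")   # the green table enters the room
for _ in range(50):            # let the transient die out
    thermostat.step()
print(round(thermostat.room_temp, 3), thermostat.deviation())
```

The control law in `step` never changes; only the perceived context does, which is the point of the analogy. The nonzero `deviation()` plays the role of the “abnormal sensation” described above.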

If, for example (allow me a science-fiction digression), the regulator were to “feed” on elements taken from red or green sources, it could, through the unexpected temperature change, predispose itself to “woo” a table or a curtain to wrest some energy from them!

Similarly, but less ironically, the individual experiencing the intense emotion of the encounter prepares (or attempts to prepare) for an approach whose only end is the achievement of carnal congress. It seems more than evident that normal mental activities are not “diverted” by emotions; if anything, as already mentioned, they filter their content and form and adapt them to a new developing reality.

“Much ado about nothing,” wrote Shakespeare, and there is no other scientific territory where the dust kicked up is so thick that one cannot see even what lies in front of one’s eyes… Now, I would not want many psychologists to attack me by saying that emotion plays a vital functional role in a person’s life, because I have denied neither this fact nor that a good machine programmed in a broad sense to be intelligent could benefit from an interactive approach also based on these “informational surges.” What I wanted to emphasize is the excessive immateriality that is de facto conferred on these kinds of sensations, which, by the way, arise not from the ontogenetic and phylogenetic development of man but rather belong to the most primordial sphere of the encephalon. Joseph LeDoux, in his beautiful book “The Synaptic Self,” emphasizes precisely the role played by the amygdala in decoding emotions and, in particular, fear; in an article [7] that appeared in the Italian journal Mente&Cervello, Hubertus Breuer writes: “…this was his (LeDoux’s. Ed. GB) great discovery: a milestone in emotion research. He had found an archaic switching circuit by which rats can perceive the world independently of their cerebral cortex.

“This sensory system,” LeDoux explains, “presumably dates back to a very ancient stage of evolution. And it must have been of great help to vertebrates when the cerebral cortex was not yet developed.” Thus, emotion is not the child of the evolution that brought humans from a state of total ignorance to the present, but rather a legacy of the past that has been preserved through the millennia solely and exclusively because it can bypass conventional channels more quickly in all those situations that require it. The same author further writes: “In everyday life, our brain receives simultaneously summary and detailed optical impressions. Therefore, LeDoux argues, we probably use two parallel pathways to assess the environment: ‘Quickly and unconsciously with the amygdala, to assess the situation; more slowly and consciously with the cortex, to recognize details.’ And this structure could apply to all five senses: the amygdala would examine all sensory perceptions for signs of danger.” This inherent parallelism in information processing can be, and often is, implemented even in artificial systems devoid of any intelligent parts: two paths are separated so that, should something severe happen, a safety circuit can completely shut down the system. But then, I again ask myself the fateful question: can machines have emotions?
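The two-path arrangement used in ordinary artificial systems can be sketched as follows. This is a minimal illustration, not a model of the amygdala or of any real safety controller: the thresholds, the function names, and the idea of averaging a window of samples are all assumptions made for the example.

```python
from statistics import mean
from typing import Iterable, List

DANGER_THRESHOLD = 90.0  # hypothetical hard limit for the fast path
TREND_THRESHOLD = 70.0   # hypothetical limit for the slow, detailed path


def fast_path(sample: float) -> bool:
    """Coarse, immediate check: a single sample over the limit is enough."""
    return sample > DANGER_THRESHOLD


def slow_path(window: List[float]) -> str:
    """Detailed check: looks at the whole history before giving a verdict."""
    if not window:
        return "normal"
    return "overheating trend" if mean(window) > TREND_THRESHOLD else "normal"


def process(samples: Iterable[float]) -> str:
    """Run both paths; the fast one can cut the slow one short at any time."""
    window: List[float] = []
    for sample in samples:
        if fast_path(sample):
            return "shutdown"      # safety circuit bypasses further analysis
        window.append(sample)
    return slow_path(window)       # no alarm: the detailed analysis decides


print(process([60.0, 65.0, 95.0, 60.0]))  # fast path trips on the third sample
print(process([75.0, 80.0, 78.0]))        # slow path notices the trend
print(process([60.0, 62.0, 61.0]))        # nothing to report
```

The fast path is crude but cannot be delayed by the slow one, which mirrors the “quick and unconscious” versus “slow and conscious” split described in the quotation.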

In light of what has been discovered in recent years, emotion is fundamental to life, but it is at the same time generated by a highly primordial mechanism; strictly speaking, artificial intelligence inspired by the inferential, mnemonic, and exploratory abilities of the human mind should not even consider such “obsolete” processes. Yet, precisely because of their importance, which has preserved them throughout evolution, it is appropriate for modern scientists and designers to take them well into account, but without treating them as anomalous processes, as oddities of a biological life made up of cells, proteins, molecules, DNA, etc. Emotion is a mental state peculiarly more immediate and upsetting than others, but it remains a “simple” mental state.

Is the mind a program?

When Paul and Patricia Churchland published their famous paper in which they asserted that the human mind was nothing more than a “mere” computer program, what happened in the scientific world was comparable, with all due respect, to Galileo’s presentation of the Dialogue Concerning the Two Chief World Systems! Immediately, two opposing factions were created. The first (the strong AI one) began to support this thesis. In contrast, the second, cautious, argumentative, and with a few more books on mathematical logic in the library, tried hard to show the opposite, mind you, not by adducing proofs of a new reality but rather by fiddling with paradoxes and some strange theorems. The argument is much simpler than we can imagine, and it is only right to summarize it to continue our discussion: take a formal system of symbols and rules, possibly introduce a few axioms (without exaggeration), and then try to prove deductively all the consequences that can be derived from it. What result will you get? Philosophically speaking, after meticulous validation work, you should reach a stable position from which to judge truth. For example, you should immediately say that, if A + B = C, then A + B = D is true if and only if C = D.

The formal system becomes a micro-universe with its planets, stars, and all the laws governing its motion. Everything is in its place, and nothing happens without elementary reasoning to validate its compatibility with the system. All doubt is banished by law, and apodictic certainty is crowned queen of reason!

Russell and Whitehead attempted something like this with their “Principia Mathematica.” It was a failure, and the most dramatic thing is that the sabotage occurred when, by then, the two scholars had pulled out thousands of propositions and were on the verge of singing the victory hymn…

Kurt Gödel found, at its core, undecidable propositions: note well that his theorem does not violate the principle of the excluded middle. It simply says there is no deduction from the fundamental concepts that would allow one to reach a binary conclusion about every truth regarding a formal system. A few years after the publication of Gödel’s theorem, Alan Turing showed that his universal machine suffered from the same problem. That is, there were particular programs on which no other program was able to make up its mind whether they would finish their work or not; in [6], Roger Penrose discusses this problem extensively and shows with some enthusiasm that we, poor human beings, can construct an algorithm that will cycle to infinity, but, at the same time, we are certain that no formal program will ever be able to solve the halting problem.
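The diagonal argument behind Turing’s result can be sketched in Python. What follows is an illustration, not a proof: `build_contrary` and `refute` are hypothetical names, and the sketch assumes that the claimed decider is a function that always returns and gives the same answer each time it is asked about the same program.

```python
from typing import Callable

Program = Callable[[], None]
Decider = Callable[[Program], bool]  # True means "this program halts"


def build_contrary(claimed_halts: Decider) -> Program:
    """Build a program that does the opposite of what the decider predicts."""

    def contrary() -> None:
        if claimed_halts(contrary):
            while True:   # the decider said "halts": loop forever instead
                pass
        # the decider said "loops": return immediately instead

    return contrary


def refute(claimed_halts: Decider) -> str:
    """Describe, without running any infinite loop, why the decider is wrong."""
    contrary = build_contrary(claimed_halts)
    if claimed_halts(contrary):
        return "decider says 'halts', but contrary() would loop forever"
    return "decider says 'loops', but contrary() returns at once"


# Two naive candidate deciders (assumptions for the demonstration):
def optimist(program: Program) -> bool:
    return True


def pessimist(program: Program) -> bool:
    return False


print(refute(optimist))
print(refute(pessimist))
build_contrary(pessimist)()  # safe to call: it returns immediately
```

Whatever answer a candidate decider gives about its own `contrary` program, the program is built to do the reverse, so no candidate can be right on every input.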

What does this mean? These two results show that the human mind is capable of making decisions (often, but not always) even when it has proved a theorem that banishes all decisions; as Penrose puts it, ” …As I have said before, much of the reason for believing that consciousness is capable of affecting truth judgments in a nonalgorithmic way comes from consideration of Gödel’s theorem. If we can realize that the role of consciousness is non-algorithmic in the formation of mathematical judgments, in which computation and rigorous demonstration are a critical factor, then no doubt we can convince ourselves that such a non-algorithmic ingredient could also be crucial to the role of consciousness in more general (non-mathematical) situations. “.

Is the mind a program? Is it possible to build a Turing machine that performs every conscious operation precisely the same way as a human being? From what has been said so far, it is clear (though not immediately understandable) that the answer tends inevitably toward the negative.

The only way to close the issue forever would be to prove the impossibility of the hypotheses, but this has never been done, and a great deal of research in the field of artificial intelligence has continued to go on assuming that any day now, this much-hyped “program of the mind” would come up.

While Penrose was scrambling for that “more” [2] that would transform the undecidable into the decidable, a sustained array of strong AI advocates were fighting a frontline battle against those (such as John Searle) who condemned not so much the algorithm itself by relying on mathematics but rather the formal symbol manipulation operation that is nothing more than the much-reviled program itself. We will discuss all this in the next section, but now let us pause on the issue supported by strong AI and try to analyze it in the light of the necessary multidisciplinarity.

In my opinion, the problem is not so much one of establishing on scientific grounds whether or not the mind is a program but instead of establishing a stable point of observation for all the psychological phenomena studied; for if one chooses the path of behavioral study, it is almost inevitable to come across more or less rigorous procedures that, starting from a set of input data, lead the subject toward the achievement of a definite goal. In almost every Cognitive Psychology text, I often encountered large scribbles that were nothing more than flowcharts, the most canonical graphical medium used to describe algorithms.

All this can only form in the reader the idea that his every material or mental action is perfectly framed within a particular scheme that is implemented by the brain when the occasion arises. Even Searle himself, who, as we shall see, is the most bitter enemy of strong AI, admits: “…we are instantiations of several computer programs and are capable of thinking.”

It is essential, however, to emphasize the verb “instantiate,” which is not to be confused with enumerate or the like; its meaning is strongly related to the concept of an algorithm. An algorithm is a formal set of rules that, if executed correctly, leads to a precise result, while instantiation is something very different: there is no longer any agent blindly executing the intended tasks, as they emerge from the behavior itself. Then again, the debate came to a head precisely because the Churchlands unabashedly asserted that the mind was a program, not an instantiation of it; thus, all the above schemes would no longer represent procedural summaries of some critical cognitive processes but the processes themselves! Even the least informed reader on the subject will quickly realize the uproar that these inferences aroused in the academic world and the unbridled joy of all AI programmers who, from that moment on, were no longer working on sterile code listings but rather on full-fledged micro-minds!

In my opinion, however, the profound effect due to this position is precisely to be found in the combination established with other branches of cognitive sciences, first and foremost psychology; what is the point of studying the neurophysiological processes of the brain?

This was the motto of the advocates of strong AI, but let no one take this the wrong way, as most psychologists also preferred a “high-level” study filtered of all forms of brain processing. Sight, hearing, touch, sense of direction, etc., were (and still are) considered starting from the results (bottom-up), with a more thorough investigation of the actual causes that were supposed to generate them coming, if at all, only later.

The basic idea was that if you heard a sound, you had to have an appropriate acoustic system, whatever it might be: the ear with the ossicle system, a 1-watt loudspeaker, or, why not? A little tiny man whispering to the brain what he should hear. The computer for strong AI advocates had such a marginal role that examples were even adduced with machines built with pipes and tanks! All this could only accentuate the gap between psychology and functional physiology: the former galloped toward countless goals, while the latter stagnated in the great sea of knowledge that has filled textbooks since Golgi and Cajal.

What is a neuron? What is a neural network? What is the purpose of the corpus callosum, cerebellum, and amygdala? For many years, questions like these (especially the last one) gave way to far more visible behavioral issues, especially considering that psychology was devoting much of its effort to understanding and to clinical practice.

The mind could thus be seen as a program, not because of evidentiary findings but because an intricate skein of necessity tied the hands of the few proponents of the genuine medical-anatomical approach. But what role does the Turing test play in all this? As we mentioned, it was formulated to the disadvantage of machines. Moreover, it requires them to try to pass themselves off as full-fledged human beings. In short, it is a game where the peculiarities of the human mind must inevitably be encoded in a long computer program.

Were this not so, one would only create simple interview interfaces such as Weizenbaum’s ELIZA, which can neither pass the test nor even reflect the behavioral implications of a human being. I believe that at present, the only way to win at the imitation game is to start from the assumption that the mind, while not being a real computer algorithm, must nevertheless be codified in terms of manipulations of formal symbols, even though one should not expect much more from it.

However, as the Churchlands themselves point out: “…the kind of skepticism [about the mind as a program. Ed. GB] manifested by Searle has numerous precedents in the history of science. In the eighteenth century, Irish bishop George Berkeley found it incomprehensible that air compression waves were essential or sufficient to give objective sound. English poet and artist William Blake and German poet and naturalist Johann Wolfgang von Goethe considered it inconceivable that tiny particles could be essential or sufficient to generate the objective phenomenon of light.

Even in this century, some have found it unimaginable that inanimate matter, no matter how well organized, could constitute an essential or sufficient premise for life alone. What men can or cannot imagine often has nothing to do with reality, which happens even to brilliant people….” What can one say about it?

The Churchlands, probably backed into a corner by a wave of malicious criticism, made the most logical argument available, even before any purely rational speculation. These statements clearly do not support their thesis (which remains at the mercy of the enemies of strong AI), but they do make it possible to justify the use of programs when attempting to prove intelligence through the Turing test.

As we will see in the next and final section, the answer to the question about minds cannot be found even in the much-hyped example of the Chinese room, and we will highlight the tremendous blunders John Searle himself made in treating AI as a means of confirming a hypothetical theory of mind.

The meaning and the Chinese room

And now we come to the longed-for point: Searle’s Chinese room and his unsuccessful attempt to wipe every miserable supporter of strong AI off the face of the earth! For those who do not know it, it is worth briefly describing how this experiment works [1]: “…Suppose I am locked in a room with a large sheet of paper covered with Chinese ideograms. Suppose further that I do not know Chinese (as is indeed the case), written or spoken, and that I am not even sure I can distinguish Chinese writing from Japanese writing or from meaningless squiggles: to me, Chinese ideograms are precisely meaningless squiggles.

Suppose that after this first paper in Chinese, I am given a second paper written in the same script, with a set of rules to correlate the second paper with the first. The rules are written in English, and I understand these rules as well as any other English-speaking individual.

They allow me to correlate one set of formal symbols with another; here, “formal” means I can identify symbols only by their graphic form. Suppose again that I am given a third batch of Chinese symbols along with some instructions, also in English, that allow me to correlate some aspects of this third sheet with the first two, and that these rules teach me to draw certain Chinese symbols having a specific shape in response to certain types of shapes assigned to me in the third sheet. Unbeknownst to me, the people who provide me with all these symbols call the contents of the first sheet a “script,” the contents of the second sheet a “story,” and the contents of the third sheet “questions.” They also call the symbols that I give them in response to the contents of the third sheet “answers to the questions,” and they call the set of rules in English that they gave me the “program.” …No one can tell from my answers alone that I don’t know a single word of Chinese. …From the external point of view, that is, from the point of view of someone reading my “answers,” the answers to the questions in Chinese and those in English are equally good.

But in the case of Chinese, unlike English, I give answers by manipulating uninterpreted formal symbols. As far as Chinese is concerned, I behave neither more nor less than a computer does: I perform computational operations on formally specified elements. As far as Chinese is concerned, I am simply an instantiation (i.e., an entity corresponding to its abstract type) of the computer program…”
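The purely formal procedure Searle describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the rule book below, its two entries, and the fallback reply are hypothetical and are not taken from Searle's text. The point it shows is that the operator matches shapes and never consults a meaning.

```python
# Hypothetical rule book: it pairs the shape of an incoming string of
# symbols with the shape of the string to hand back. Nothing in it
# encodes what either string means.
RULE_BOOK = {
    "你好吗": "我很好",
    "你是谁": "我是一个人",
}

# Hypothetical reply for shapes the rule book does not list.
FALLBACK = "请再说一遍"


def room_operator(question: str) -> str:
    """Return the symbols the rule book associates with the given shape."""
    return RULE_BOOK.get(question, FALLBACK)


if __name__ == "__main__":
    for incoming in ("你好吗", "你是谁", "???"):
        print(incoming, "->", room_operator(incoming))
```

From outside the room, the replies look competent; inside, there is only a dictionary lookup, which is exactly the situation the passage above describes.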

Let us begin by saying that this is not an experiment in evaluating an artificial consciousness: John Searle, caged in his little Chinese room, is like a goldfish who, from inside a glass bowl, believes his macrocosm is contained in a few cubic centimeters. Then, just out of duty to science, we will say that this proof looks very much like one of those “pure,” i.e., wholly ideal and virtual, experiments often invoked by theoretical physics. We will assess how absurd its premises are without even touching on all the variations, answers, and alternatives this dilemma has raised.

Of course, we can say that Searle is perfectly correct when he states that syntax cannot generate semantics: this seems to me not only evident from a linguistic point of view but also extremely rational since no rule can regulate the process of signification of a sentence expressed in a given idiom.

Syntax is thus merely a set of prescriptions that must be met for communication to take place between two members of the same cultural community; in the words of Claude Shannon, it represents the code common to both the sender and the receiver, and its integrity is the basis of the decoding process.

Unlike the Churchlands, who have often reiterated the opposite, I do not believe that anything extraordinary can come out of syntax, at least until semantics is introduced. The Chinese room is a virtual place devoid of any connection with meanings and therefore incapable of meaning; this is the first point against Searle’s thesis: whoever said that, if indeed the mind were a program, it should limit itself to manipulating symbols without making any association with conscious reality?

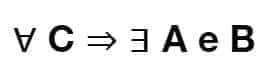

We are debating artificial intelligence, not pure mathematics! We can think of constructing a program that, while manipulating symbols, operates at the same time with the objects associated with them and thus gains awareness of them. To clarify our ideas, let us think of a simple formal system consisting of three symbols { A, B, C } and introduce a one-to-one (bijective) function, let’s call it f(x), that associates an image with each of them: in order, a vase, a pile of soil, and a flower.

Now let us analyze the proposition:

That is: for every flower (C) there must be a vase (A) filled with soil (B). The rule written above is expressed in the formal language of logic. Still, by mental associations, it is possible to imagine the situation by thinking directly of the superposition of f(A), f(B), and f(C), or, in less abstract terms, of a small picture in which a vase with a flower is depicted; the only a priori condition, which, however, can only be experienced, is the necessity of A and B for C to be able to sustain itself. With this example, I wanted to show that even if one does not know the formal system, it is possible to use it, perhaps with the help of a good interpreter, based solely on the consciousness of facts or, rather, meanings.
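The example can be made concrete with a short Python sketch. The formula itself is not reproduced in the text, so the reading used here, C → (A ∧ B), is an assumption based on the verbal gloss “for every flower there must be a vase filled with soil”; the function names are likewise hypothetical. The sketch pairs the purely syntactic check with the interpretation f that turns each symbol into an object.

```python
from itertools import product

SYMBOLS = ("A", "B", "C")

# Interpretation f from the text: one image per symbol, none shared.
f = {"A": "vase", "B": "soil", "C": "flower"}


def rule_holds(world):
    """Assumed rule C -> (A and B): a flower needs a vase filled with soil."""
    return (not world["C"]) or (world["A"] and world["B"])


def depict(world):
    """Read a truth assignment through f as the objects in the picture."""
    return [f[s] for s in SYMBOLS if world[s]]


# Enumerate every truth assignment and keep those the rule allows.
worlds = [dict(zip(SYMBOLS, values))
          for values in product([False, True], repeat=3)]
admissible = [w for w in worlds if rule_holds(w)]

if __name__ == "__main__":
    for w in admissible:
        print(depict(w))
```

The function `rule_holds` works on symbols alone, while `depict` is where the association with objects enters; the argument above is that a mind needs the second step as well as the first.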

Where is the meaning of the Chinese room? Try as I might, I see only manipulations of symbols, and that doesn’t surprise me all that much because that is precisely what Searle desires… However, for the sake of intellectual honesty, it must be said that a mind, however one wishes to understand it, cannot disregard the manipulation of meanings, which, in turn, arise primarily from interaction with the external environment. If one wants to build a machine according to the criteria of strong AI, one should not, in my opinion, settle for a huge set of rules operating on scribbles commonly called symbols: such a computer would be able to do many good things and perhaps even pass the Turing test, but it could never be called intelligent in the sense of the term we humans use.

What has happened is a misunderstanding on both sides: the Churchlands proposed a visionary theory of mind, and, for his part, John Searle responded with a counterexample that was perhaps only real in the little room! The biggest mistake the former made was attributing an extraordinary causal power to the symbol, which is strange given the arbitrariness of formal systems. At the same time, the latter not only postulated the impossibility of having a mind devoid of semantics but also skillfully avoided its use in formulating his famous example.

For an Italian (or an Englishman), Chinese ideograms will always remain more or less pretty traces on a piece of paper, nothing more; no external system with them as its domain and codomain will ever be able to extract clarifying information about why those symbols were traced.

No matter how much effort one puts into evaluating all possible alternatives (such as the “systems reply” formulated by Berkeley researchers), no intellectual force can bend the barriers that separate the world from representations, but Searle did not say this. He kept silent about it for reasons unknown to me, but certainly with the (false) result of sharpening the criticism against strong AI advocates.

Let us now reassess the question “Is the mind a program?” Asked whether a digital computer can think, Searle himself answers: “…If by “digital computer” we mean anything for which there is a level of description at which it can be properly described as an instantiation of a computer program, then the answer is (again), of course, yes, since we are instantiations of many computer programs and are capable of thinking.”

On this point, I fully agree with Searle and evidently cannot but reject the assumption that my mind as such is a program, not least because “…The distinction between the program and its realization in the computer circuits seems to correspond to the distinction between the level of mental operations and the level of brain operations. If we could describe the level of mental operations as a formal program, then it would seem we could describe the essence of the mind without resorting to either introspective psychology or the neurophysiology of the brain. But the equation “mind is to the brain as program is to hardware” breaks down at several points….”

From this, it is evident what the word “instantiate” means, and without forcing the words too much, it seems to me that the transition from a list of instructions to an instance of the same can only and exclusively take place through those processes of associative signification I mentioned above.

However, there is one flaw worth dwelling on a little more. A widespread and indeed correct conception sees artificial intelligence not only as a pseudo-technological product but also as a basis of inquiry for cognitive science. Of course, I would like to point out that we are not talking here about a simulation of mental processes, a practice also well accepted by Searle and expounded very well in [15], but rather about the construction of organisms that possess the mental faculties one wishes to study.

The Californian philosopher seems to get off the hook in this area: “…The study of the mind starts with facts such as that men have beliefs while thermostats, telephones, and adding machines have none. …Beliefs having the possibility of being strong or weak, nervous, anxious or firm, dogmatic, rational or superstitious; blind faiths or wavering cogitations; all sorts of beliefs. …”

To begin with, the first statement is plainly illogical, in that it is not scientifically correct to define a science negatively; one cannot define meteorology by saying that it does not study atomic reactions, the propagation of radio waves, etc. The only rational way to proceed is to lay a positive foundation and build a firm theory brick by brick; and besides, whoever said that my thermostat has no convictions?

Is there a physical law that restricts the concept of “belief” to a privileged sphere such as human beings? I think not, but I would love to ask Professor Searle what goes through his mind when he thinks about a belief… He would probably refer me back to the end of his paper “Minds, Brains and Programs” to have me reread what I, with a bit of cleverness, have already quoted in this article: beliefs must have attributes (reread them; I don’t feel like wasting any more words), that is, they must be classifiable in a highly abstract way within cultural patterns that are far from primitive. In short, to put it bluntly, those few words contain a concentration of anti-science that would make even a fortune teller cringe!

But then, why is my thermostat not convinced of the temperature? Searle’s answer is oversimplified and humanitarian (vis-à-vis AI advocates): otherwise, the mind would be everywhere, and the only suitable discipline for the study of this psycho-all would rightfully be a panpsychic philosophy.

My version is slightly different, and in these few remaining lines, I will try to set it out as clearly as possible.

Suppose, just so as not to change the subject, that we have a thermostat; it is normally equipped with a temperature sensor that, for simplicity, we take to be a thermistor, that is, a resistor whose resistance varies with temperature according to a law that we assume to be almost linear.

If, for example, R = 200 Ohms at 20 °C, at 35 °C R will perhaps have risen to 1500 Ohms; it does not matter how the function evolves. What matters is that there is a well-defined physical relationship linking the ambient temperature to a particular parameter, an internal state, to be exact. Keeping in mind Ohm’s famous law V = RI, if we run a constant current through the thermistor (e.g., with a transistor), the voltage across its terminals will, in turn, be proportional to the temperature and, if we do things right and eliminate the various constants, we can say that V = T. When the device is on, there will always be a voltage V across R. Thus the system will have a continuous (in the mathematical sense) internal state variable whose dynamics over time describe the temperature trend in the surrounding environment.
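The chain from temperature to internal state can be sketched in Python. This is a minimal sketch under explicit assumptions: the two resistance values are the ones quoted above, but the straight line drawn through them, the 1 mA drive current, and all function names are hypothetical choices made for illustration, and the line is only meaningful between the two quoted temperatures.

```python
R_AT_20 = 200.0     # ohms at 20 degrees C (figure quoted in the text)
R_AT_35 = 1500.0    # ohms at 35 degrees C (figure quoted in the text)
CURRENT_A = 0.001   # assumed constant drive current of 1 mA

# Assumed linear law between the two quoted points (ohms per degree).
SLOPE = (R_AT_35 - R_AT_20) / (35.0 - 20.0)


def resistance(temp_c: float) -> float:
    """Thermistor resistance under the assumed linear law."""
    return R_AT_20 + SLOPE * (temp_c - 20.0)


def voltage(temp_c: float) -> float:
    """Internal state of the thermostat: V = R * I (Ohm's law)."""
    return resistance(temp_c) * CURRENT_A


def temperature_from_voltage(volts: float) -> float:
    """Invert the chain: recover the temperature from the internal state."""
    return 20.0 + (volts / CURRENT_A - R_AT_20) / SLOPE


if __name__ == "__main__":
    for t in (20.0, 27.5, 35.0):
        v = voltage(t)
        print(f"T = {t} C  ->  V = {v:.3f} V  ->  T = {temperature_from_voltage(v):.1f} C")
```

Because the mapping can be inverted, the voltage carries the same information as the temperature itself, which is the sense in which the text speaks of an internal state variable that describes the environment.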

At this point, the dilemma arises: according to Searle, the thermostat knows nothing about temperature, but according to me, it has a much deeper awareness than one might imagine. The disagreement stems from the kind of knowledge being examined. From an ontological and epistemological point of view, I believe that few people in the world would be able to define what temperature is. Still, I am sure anyone can estimate its value at any time of day and anywhere.

If a person knows that the temperature is low, say 8 °C, she holds a belief in the strict sense. In contrast, if the thermostat possesses an internal value of 8.0002 °C, well, according to Searle’s statement, it is entirely unconscious. This seems a significant inconsistency, one that can only arise from axiomatizing, without justification, an exclusionary principle (machines cannot have beliefs). I hold a conviction when I can verify (by any means, even the acceptance of a dogma) its object. It does not matter whether certain attributes or accouterments can be associated with my internal state, since they are assigned by a higher unit that, based on a variety of factors, including experience, can give rise to a new internal state (a state of the state) correlated with one of the parameters exhaustively listed by Searle.

Again, the threat of panpsychism is unfounded, since a mind certainly cannot be said to be present merely because there are beliefs. It makes no sense, just to avoid a cognitive catastrophe, to decree that the possessors of a mind are those who process information in a certain way, while all others are mere automatons devoid of any existential purpose.

My thermostat does not have a mind, but it certainly possesses an element that makes it capable of interacting with the environment, just as some microorganisms possess cilia and flagella to ambulate and phagocytize their food.

But then, is there a boundary between a set of internal states and a mind? Unless you want to bother Descartes with his res cogitans, I believe that no such violent “rifts” have occurred in evolution as to turn an ape or a hamster into a human with a mind. I want to assume that, in this case, sheer number is, if not the crucial element, certainly the predominant one in the phylogeny of the mind: today the brain of Homo sapiens sapiens possesses on the order of 100 billion active units (the neurons), each of which communicates, through neurotransmitters and neuromodulators, with as many as twenty thousand other cells. Have machines of this size ever been made?

The answer is no! And what Massimo Piattelli Palmarini says in [4] makes no sense at all: he claims that connectionism produced many failures, when the experiments he cites used computers that simulated at most a few hundred artificial neurons…
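To give a feel for the disproportion, here is a back-of-the-envelope calculation in Python. The inputs are assumptions chosen only for the order of magnitude: about 10^11 neurons, the upper figure of twenty thousand connections per cell mentioned above, and 500 units as a stand-in for “a few hundred” artificial neurons.

```python
# Order-of-magnitude inputs (assumptions, not measurements).
BRAIN_NEURONS = 10**11        # neurons in a human brain, order of magnitude
LINKS_PER_NEURON = 20_000     # upper figure for connections per neuron
SIMULATED_NEURONS = 500       # stand-in for "a few hundred" artificial units

# Upper bound on the number of connections in the brain.
brain_links = BRAIN_NEURONS * LINKS_PER_NEURON

# How many times larger the brain is than the simulated network, by unit count.
scale_gap = BRAIN_NEURONS // SIMULATED_NEURONS

if __name__ == "__main__":
    print(f"connections in the brain (upper bound): {brain_links:.1e}")
    print(f"brain units per simulated unit: {scale_gap:,}")
```

Under these assumptions the simulated networks fall short of the brain by a factor of hundreds of millions in units alone, before connections are even counted.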

I often get the impression that one wants to prove Mr. Universe’s strength by having him beat a baby who is barely taking his first steps, and at the first inhuman knockout, an angry crowd jumps to its feet, shouting that he was right. This is not meant to be an apologia for connectionism (although it is), but as I have said before, a scientific approach that starts by abstracting, as linguistics does, for example, is unlikely to lead us to real results, that is, results corroborated by experimental evidence. I don’t give a damn if someone comes along and tells me fairy tales about general grammars or semiotic triangles if I don’t first get an explanation of why a child of just over 18 months not only can speak but can understand and, much to John Searle’s delight, have beliefs. Has a linguist ever studied Broca’s and Wernicke’s areas? Yet people with aphasias of any kind always have lesions in those areas of the brain…

I believe that it is much better to study the mind using machines programmed in the most appropriate way (an artificial neural network is, after all, also a program) without fearing that some mad scientist will thwart our efforts by locking us inside a Chinese room and without being discouraged by the exponential power that real brains present. I want to paraphrase the title of a beautiful book [23] by Nobel Laureate Rita Levi Montalcini: “A star does not constitute a galaxy, but stars constitute a galaxy!”

Bibliographical references

- [1] Hofstadter D., Dennett D., L’io della Mente, Adelphi

- [2] Searle J., Il Mistero della Coscienza, Raffaello Cortina

- [3] Von Neumann J. et al., La Filosofia degli Automi, Boringhieri

- [4] Piattelli-Palmarini M., I Linguaggi della Scienza, Mondadori

- [5] Davis M., Il Calcolatore Universale, Adelphi

- [6] Penrose R., La Mente Nuova dell’Imperatore, SuperBur

- [7] Breuer H., Le Radici della Paura, Mente&Cervello n.8 – Anno II

- [8] Dennett D., La Mente e le Menti, SuperBur

- [9] Pinker S., Come funziona la mente, Mondadori

- [10] Aleksander I., Come si costruisce una mente, Einaudi

- [11] Bernstein J., Uomini e Macchine Intelligenti, Adelphi

- [12] Eco U., Kant e l’ornitorinco, Bompiani

- [13] Minsky M., La Società della Mente, Adelphi

- [14] Brescia M., Cervelli Artificiali, CUEN

- [15] Parisi D., Simulazioni, Il Mulino

Various texts on connectionism are available in Italian, including:

- [16] Floreano D., Mattiussi C., Manuale sulle Reti Neurali, Il Mulino

- [17] Cammarata S., Reti Neuronali, Etas Libri

- [18] Parisi D., Intervista sulle Reti Neurali, Il Mulino

- [19] Parisi D., Mente. I nuovi modelli di vita artificiale, Il Mulino

On the neurophysiology of the brain and mind and on cognitivism:

- [20] LeDoux J., Il Sé sinaptico, Raffaello Cortina

- [21] Oliverio A., Prima Lezione di Neuroscienze, Laterza

- [22] Oliverio A., Biologia e Filosofia della Mente, Laterza

- [23] Levi Montalcini R., La Galassia Mente, Baldini&Castoldi

- [24] Boncinelli E., Il Cervello, la mente e l’anima, Mondadori

- [25] De Bono E., Il Meccanismo della Mente, SuperBur

- [26] Bassetti C. et al., Neurofisiologia della mente e della coscienza, Longo

- [27] Legrenzi P., Prima Lezione di Scienze Cognitive, Laterza

- [28] Neisser U., Conoscenza e Realtà, Il Mulino

The essay is also featured in the volume “Saggi sull’Intelligenza Artificiale e la Filosofia della Mente.”

Photo by David Matos